Owen Jow

Graphics & Image Processing

- 184[1] - Rasterizing SVG Files

- 184[2] - Mesh Manipulation

- 184[3] - Path Tracing

- 184[4] - Lens Simulation

- 184[5] - Fluid Simulation

- 194[1] - Colorization via Alignment

- 194[2] - Pinhole Camera

- 194[3] - Frequencies

- 194[4] - Seam Carving

- 194[5] - Face Morphing

- 194[6] - Light Fields

- 194[7] - Image Stitching

- 194[8] - Single View Modeling

- 194[9] - Video Textures

Frequencies

This project covers four frequency-based techniques: image sharpening, hybrid images, Gaussian and Laplacian stacks, and multiresolution blending.

The first of these is sharpening. Images are perceived as “sharp” because of an abundance of high frequencies, or edges. So to sharpen an image, all we have to do is extract its high frequencies (for instance, by subtracting a blurred copy of the image from the original)… and then add them back in, which amplifies the edges that were already there.
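One way to sketch this step is unsharp masking in NumPy, assuming 2D grayscale float images in [0, 1]. The helper names `gaussian_blur` and `sharpen` and the defaults `sigma=2.0` and `alpha=1.0` are hypothetical choices for illustration, not the project's actual code. Here the high frequencies are taken as the image minus a Gaussian-blurred copy.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2D float array, with reflected edges."""
    radius = max(1, int(np.ceil(3 * sigma)))  # truncate the kernel at ~3 sigma
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()  # normalize so flat regions keep their value
    padded = np.pad(img, radius, mode="reflect")

    def conv(v):
        # "valid" mode trims exactly the padded border back off
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)  # blur along x
    return np.apply_along_axis(conv, 0, rows)  # then along y


def sharpen(img, sigma=2.0, alpha=1.0):
    """Unsharp masking: boost the high frequencies already in the image."""
    low = gaussian_blur(img, sigma)  # low-pass copy
    high = img - low  # high frequencies (edges)
    # hypothetical gain alpha controls how strongly edges are amplified
    return np.clip(img + alpha * high, 0.0, 1.0)
```

With `alpha=1.0` the output is the low-pass copy plus twice the original high frequencies. Larger values sharpen more aggressively but also amplify noise. For color images, the same function can be applied to each channel.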

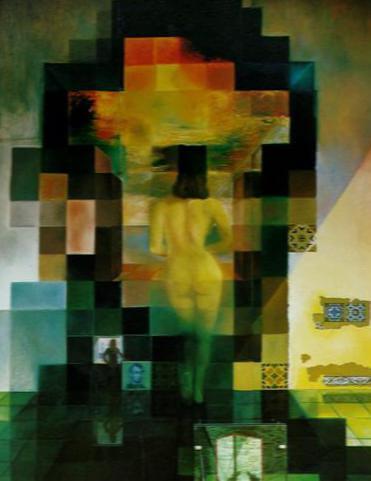

Next, we take it upon ourselves to create a hybrid image. A hybrid image combines the low frequencies of one image with the high frequencies of a different image. From afar, only the low-frequency content is visible, but up close the viewer naturally focuses on the high frequencies. We generate a hybrid image as the sum of these two components.
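A minimal NumPy sketch of that sum follows. It assumes two pre-aligned grayscale float images of the same size. The blur widths `sigma_low=6.0` and `sigma_high=3.0`, which act as the two frequency cutoffs, and the function names are hypothetical and would need tuning per image pair.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2D float array, with reflected edges."""
    radius = max(1, int(np.ceil(3 * sigma)))  # truncate the kernel at ~3 sigma
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()  # normalize so flat regions keep their value
    padded = np.pad(img, radius, mode="reflect")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)
    return np.apply_along_axis(conv, 0, rows)


def hybrid(img_low, img_high, sigma_low=6.0, sigma_high=3.0):
    """Low frequencies of img_low plus high frequencies of img_high."""
    low = gaussian_blur(img_low, sigma_low)  # dominates when viewed from afar
    high = img_high - gaussian_blur(img_high, sigma_high)  # dominates up close
    return low + high
```

The result is not clipped, so it may fall slightly outside [0, 1] and should be rescaled before display.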

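After that, we assemble Gaussian and Laplacian stacks of our images. Each level of a Gaussian stack is a progressively blurrier copy of the image (unlike a pyramid, a stack is never downsampled). Each Laplacian level is the difference between adjacent Gaussian levels, so it isolates a single band of frequencies.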
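The two stacks can be sketched in NumPy as follows, assuming grayscale float images. This version blurs the previous level with a fixed `sigma` each time, and it keeps the final Gaussian level as the last Laplacian entry so that the stack sums back to the original image. The function names and the defaults `levels=5` and `sigma=2.0` are illustrative assumptions.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2D float array, with reflected edges."""
    radius = max(1, int(np.ceil(3 * sigma)))  # truncate the kernel at ~3 sigma
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)
    return np.apply_along_axis(conv, 0, rows)


def gaussian_stack(img, levels=5, sigma=2.0):
    """Level 0 is the image; each later level blurs the previous one (no downsampling)."""
    stack = [img]
    for _ in range(levels - 1):
        stack.append(gaussian_blur(stack[-1], sigma))
    return stack


def laplacian_stack(img, levels=5, sigma=2.0):
    """Band-pass differences of adjacent Gaussian levels, plus the low-pass residual."""
    g = gaussian_stack(img, levels, sigma)
    bands = [g[i] - g[i + 1] for i in range(levels - 1)]
    return bands + [g[-1]]
```

Because the differences telescope, `sum(laplacian_stack(img))` reconstructs `img` exactly (up to floating-point error).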

Finally, we attempt to seamlessly merge pairs of images using a multiresolution blending technique. At each level of the two images' Laplacian stacks, we linearly combine the two layers, weighting them by the corresponding level of a Gaussian stack of the blending mask. We then sum the blended levels to produce the final image.
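A self-contained NumPy sketch of that blend follows. It assumes two same-sized grayscale float images and a float `mask` in [0, 1] that is 1 where the first image should appear. The function names and the defaults `levels=5` and `sigma=2.0` are hypothetical, and the stacks are built as in a plain repeated-blur scheme rather than copied from the project.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2D float array, with reflected edges."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")

    def conv(v):
        return np.convolve(v, kernel, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)
    return np.apply_along_axis(conv, 0, rows)


def gaussian_stack(img, levels, sigma):
    stack = [img]
    for _ in range(levels - 1):
        stack.append(gaussian_blur(stack[-1], sigma))
    return stack


def laplacian_stack(img, levels, sigma):
    g = gaussian_stack(img, levels, sigma)
    return [g[i] - g[i + 1] for i in range(levels - 1)] + [g[-1]]


def blend(img_a, img_b, mask, levels=5, sigma=2.0):
    """Blend each frequency band with a progressively softer mask, then sum."""
    la = laplacian_stack(img_a, levels, sigma)
    lb = laplacian_stack(img_b, levels, sigma)
    gm = gaussian_stack(mask, levels, sigma)  # blurrier mask for coarser bands
    return sum(m * a + (1.0 - m) * b for a, b, m in zip(la, lb, gm))
```

Low-frequency bands are mixed across a wide transition while fine detail is mixed across a narrow one, which is what hides the seam. For color images, the blend would run once per channel with the same mask.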
