Owen Jow

Graphics & Image Processing

- 184[1] - Rasterizing SVG Files

- 184[2] - Mesh Manipulation

- 184[3] - Path Tracing

- 184[4] - Lens Simulation

- 184[5] - Fluid Simulation

- 194[1] - Colorization via Alignment

- 194[2] - Pinhole Camera

- 194[3] - Frequencies

- 194[4] - Seam Carving

- 194[5] - Face Morphing

- 194[6] - Light Fields

- 194[7] - Image Stitching

- 194[8] - Single View Modeling

- 194[9] - Video Textures

Path Tracing

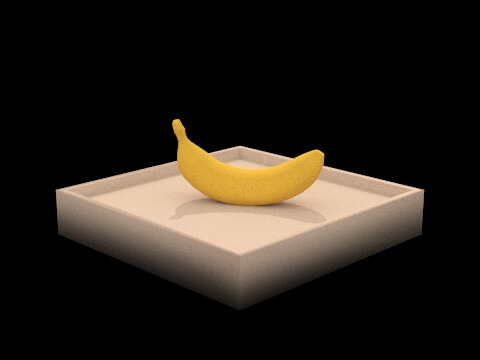

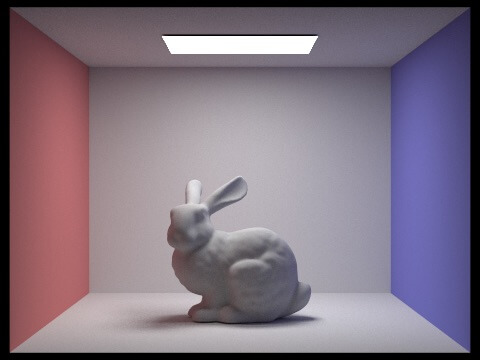

In this project, we implemented a physically based renderer: a program that produces photorealistic images from scene descriptions by modeling the transport of light. Put simply, the renderer traces rays from the eye into the environment and shades each output pixel according to the objects its rays hit.


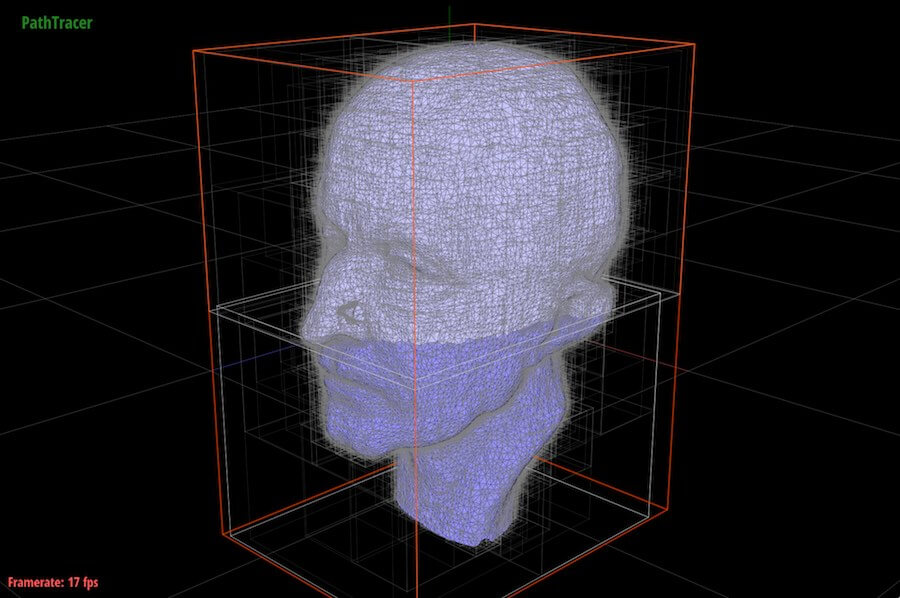

That being the case, a naïve implementation is forced to run an intersection test between every environment-bound ray and every geometric primitive in the environment. To speed this up, we partition the primitives into spatial regions (“bounding volumes”, or BVs) arranged in a hierarchy, so that each BV may itself contain other BVs, and we collision-test each ray against one BV at a time. If a ray doesn’t collide with a BV, it doesn’t collide with any of the primitives (or other BVs) inside that BV. Thus, at each step we’re able to eliminate a large part of the search space.
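The pruning idea can be sketched in a few lines of Python. This is a minimal illustration and not the project's actual code: it assumes axis-aligned boxes as the bounding volumes, and the names `hit_aabb`, `BVHNode`, and `candidate_primitives` are hypothetical.

```python
import math

def hit_aabb(origin, direction, box_min, box_max):
    """Slab test: True if the ray origin + t * direction (t >= 0) touches the box."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of slabs: it can only hit
            # if the origin already lies between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True

class BVHNode:
    """Hypothetical node: a box plus child nodes and/or primitives stored directly."""
    def __init__(self, box_min, box_max, children=(), primitives=()):
        self.box_min = box_min
        self.box_max = box_max
        self.children = list(children)
        self.primitives = list(primitives)

def candidate_primitives(node, origin, direction):
    """Return the primitives that still need an exact intersection test."""
    if not hit_aabb(origin, direction, node.box_min, node.box_max):
        return []  # the whole subtree is skipped
    found = list(node.primitives)
    for child in node.children:
        found.extend(candidate_primitives(child, origin, direction))
    return found

# Two leaves side by side along x, wrapped in one root box.
left = BVHNode((-2, -1, -1), (-1, 1, 1), primitives=["a"])
right = BVHNode((1, -1, -1), (2, 1, 1), primitives=["b"])
root = BVHNode((-2, -1, -1), (2, 1, 1), children=[left, right])
```

A ray that misses the root box never touches either leaf, and a ray that hits only one leaf box skips the primitives in the other. A real renderer would also track the nearest hit distance so it can stop descending into boxes that lie behind an intersection it has already found.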

Once we find a ray’s first intersection, we need to calculate the lighting at that point. We do so by sending a ray straight toward each light (direct illumination) and also by bouncing randomly sampled, randomly terminated BSDF rays around the scene (indirect illumination). In each case, we add the illumination accumulated at the intersection point to the current screen-space pixel.
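Random termination is commonly done with Russian roulette: a path continues with some probability, and surviving paths are reweighted by that probability so the estimate stays unbiased. The sketch below assumes that scheme (the text above does not name the one actually used) and replaces the scene with a toy model in which every bounce sees the same emitted radiance and the same albedo. The names `sample_path` and `estimate` and all constants are hypothetical.

```python
import random

def sample_path(emitted, albedo, continue_prob, rng):
    """Radiance carried by one randomly terminated path in the toy scene."""
    radiance = 0.0
    throughput = 1.0
    while True:
        # Light picked up at the current bounce, scaled by what survives so far.
        radiance += throughput * emitted
        # Russian roulette: stop with probability 1 - continue_prob.
        if rng.random() >= continue_prob:
            break
        # Surviving paths are divided by continue_prob to compensate
        # for the paths that were terminated.
        throughput *= albedo / continue_prob
    return radiance

def estimate(emitted, albedo, continue_prob, num_paths, seed=0):
    """Average many paths; converges to emitted / (1 - albedo) in this toy scene."""
    rng = random.Random(seed)
    total = sum(sample_path(emitted, albedo, continue_prob, rng)
                for _ in range(num_paths))
    return total / num_paths
```

In the toy scene the exact answer is the geometric series `emitted * (1 + albedo + albedo**2 + ...)`, so `estimate(1.0, 0.5, 0.7, 20000)` should land close to 2.0 even though each individual path is finite.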

This is called path tracing, incidentally, because by bouncing rays around the scene we simulate the full path that light takes from its sources to the eye. (For the unfamiliar: we actually trace rays in reverse, from the eye toward the light sources. Tracing in the physically correct direction is computationally impractical, since an overwhelming majority of rays leaving the lights would never reach the eye.)

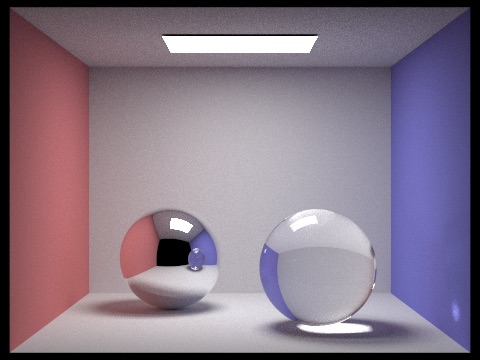

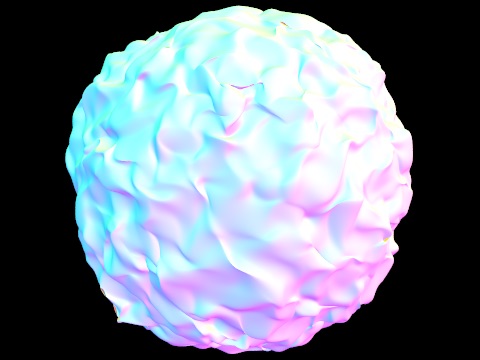

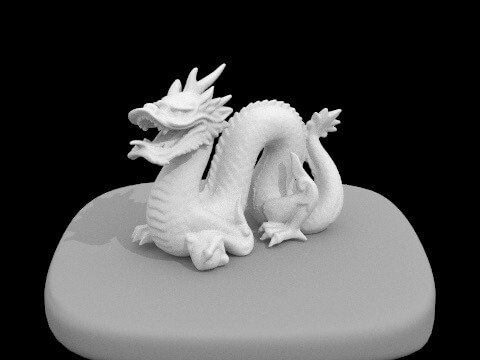

Upon completion of the path tracing algorithm, we are able to produce images such as the ones shown here.


As icing on the cake, we also implement reflection and refraction so that the BSDFs of reflective and refractive materials are modeled properly.
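For reference, the two direction computations can be sketched as follows. This is a minimal version under stated assumptions rather than the project's code: directions are unit-length tuples, the normal `n` points against the incoming direction `d`, and `eta` is the ratio of the incident to the transmitted index of refraction. The helper names are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect(d, n):
    """Mirror the incoming direction d about the unit normal n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

def refract(d, n, eta):
    """Bend d through the surface using Snell's law.

    Returns None when total internal reflection occurs.
    """
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # no transmitted ray exists
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + (eta * cos_i - cos_t) * ni
                 for di, ni in zip(d, n))
```

A ray heading straight down onto an upward-facing surface reflects straight back up and refracts without bending. A ray leaving glass (index about 1.5) into air at 60 degrees from the normal has no transmitted direction, so `refract` returns `None` and only the reflected ray remains.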